Decoding the JFK Files: Unraveling Secrets and Historical Insights

The agency's recent establishment of dedicated cyber units and data science teams suggests recognition of these new realities. How effectively it can adapt its traditional strengths to these emerging domains may well determine its relevance in coming decades.

Analyzing the Evidence: Eyewitness Accounts and Forensic Data

Eyewitness Testimony: A Fragmented Narrative

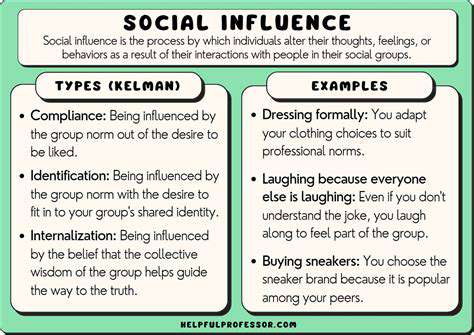

Memory researchers have long understood that traumatic events create particularly challenging recall conditions. The Dealey Plaza shooting is a textbook case of how stress, vantage point, and preconceptions can shape perception. Modern analysis techniques now allow researchers to map witness locations precisely, revealing how physical positioning influenced what individuals could - and couldn't - have observed.
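
The line-of-sight reasoning behind this kind of witness mapping can be sketched in a few lines. The Python below is a minimal plan-view (2D) sketch, assuming each obstruction can be approximated as a circle; the names `Obstruction`, `point_segment_distance`, and `has_line_of_sight` are hypothetical, and a real reconstruction would also need to model elevation, the motorcade's movement, and crowd occlusion. A sight line counts as clear when it passes farther from each obstruction's centre than that obstruction's radius.

```python
import math
from dataclasses import dataclass

# Plan-view (2D) sketch: heights, the target's motion, and crowd occlusion
# are ignored. Names and coordinates are hypothetical, for illustration only.

@dataclass(frozen=True)
class Obstruction:
    """An obstacle such as a tree or sign, approximated as a circle."""
    x: float
    y: float
    radius: float

def point_segment_distance(px, py, ax, ay, bx, by):
    """Shortest distance from point P to the line segment AB."""
    abx, aby = bx - ax, by - ay
    length_sq = abx * abx + aby * aby
    if length_sq == 0.0:
        # Degenerate segment: A and B coincide.
        return math.hypot(px - ax, py - ay)
    # Project P onto the line AB, then clamp so the closest point stays on the segment.
    t = ((px - ax) * abx + (py - ay) * aby) / length_sq
    t = max(0.0, min(1.0, t))
    closest_x, closest_y = ax + t * abx, ay + t * aby
    return math.hypot(px - closest_x, py - closest_y)

def has_line_of_sight(witness, target, obstructions):
    """True if the straight sight line from witness to target clears every obstruction."""
    (wx, wy), (tx, ty) = witness, target
    return all(
        point_segment_distance(o.x, o.y, wx, wy, tx, ty) > o.radius
        for o in obstructions
    )
```

For example, a witness at (0, 0) looking toward a target at (10, 0) is blocked by an obstruction of radius 1 centred at (5, 0) but not by one centred at (5, 3). These coordinates are illustrative only, not survey data; applying the same test to every mapped witness position is what turns a site plan into a list of who could plausibly have seen what.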

Recent advances in cognitive psychology have shed new light on these historical accounts. The weapon focus effect, in which witnesses fixate on a firearm to the exclusion of other details, may explain several apparent inconsistencies. Similarly, memory conformity, in which later conversations alter individual recollections, helps account for certain patterns in the testimony.

Forensic Evidence: Ballistics and the Gun

Present-day forensic scientists have applied modern ballistic fingerprinting techniques to re-examine the physical evidence. These methods have confirmed the basic trajectory analysis and also revealed subtle details about the weapon's condition that were not detectable in 1963: the striation patterns on recovered bullet fragments show barrel wear consistent with a well-used but properly maintained firearm.

Advanced 3D modeling has allowed researchers to reconstruct the shooting scenario with unprecedented precision. These digital recreations confirm the feasibility of the three-shot sequence while highlighting the marksmanship it required. Ballistics experts note that the firing geometry, particularly the downward angle of the shot, created additional challenges for the shooter.
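
Once a 3D model fixes the shooter and target positions, the downward angle follows from basic trigonometry: the angle of depression is the arctangent of the height drop over the horizontal distance. The Python below is a minimal sketch, assuming positions are (x, y, z) tuples in metres with z as height; the function names are hypothetical, and the coordinates in the example are illustrative, not measurements from Dealey Plaza.

```python
import math

# Points are (x, y, z) tuples in metres, with z as height above a common datum.
# Coordinates used with these functions are illustrative assumptions,
# not survey measurements.

def depression_angle_deg(shooter, target):
    """Angle below the horizontal, in degrees, of the shooter-to-target line."""
    dx = target[0] - shooter[0]
    dy = target[1] - shooter[1]
    drop = shooter[2] - target[2]  # positive when the shooter is above the target
    horizontal = math.hypot(dx, dy)
    return math.degrees(math.atan2(drop, horizontal))

def slant_range(shooter, target):
    """Straight-line distance between shooter and target."""
    return math.dist(shooter, target)

shooter = (0.0, 0.0, 10.0)  # hypothetical elevated position
target = (10.0, 0.0, 0.0)   # hypothetical point at ground level
print(round(depression_angle_deg(shooter, target), 1))  # 45.0
```

With these illustrative positions the slant range is about 14.1 m. Using `atan2` rather than dividing the drop by the horizontal distance keeps the function defined even when the target sits directly below the shooter.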

The Warren Commission Report: A Critical Examination

Historians now assess the Commission's work in light of the conditions under which it operated. The compressed timeline (just 10 months for the full investigation) and the political pressures of the Cold War era inevitably shaped its conclusions. What is often overlooked is how groundbreaking the Commission's methodology was for its time; it established protocols that influenced later investigations.

Recent document releases have provided fuller context for some of the Commission's more controversial determinations. While not resolving all questions, these materials show commissioners grappling conscientiously with complex evidence - a nuance often missing from popular accounts.

Alternative Theories: Challenging the Official Narrative

Serious researchers approach alternative scenarios with rigorous evidentiary standards. The most plausible theories focus on specific evidentiary gaps rather than sweeping conspiracies. Forensic acoustics studies of the Dictabelt recording continue to generate legitimate scholarly debate, with peer-reviewed papers advancing competing interpretations.

Advances in materials science have allowed new testing of bullet fragments, while DNA analysis has resolved some questions about evidence handling. These scientific approaches have helped separate credible lines of inquiry from more speculative claims, moving the discussion toward more productive ground.

The Role of Governmental Agencies: Investigation and Accountability

Organizational historians note that the 1963 investigation took place during a transitional period for federal law enforcement. The FBI was still developing modern forensic capabilities, and interagency cooperation suffered from jurisdictional tensions and technological limitations. Later reforms in evidence-handling protocols trace directly to lessons learned from this case.

The investigation's legacy includes standardized procedures for major crimes, improved forensic documentation, and clearer chains of custody - all now considered fundamental to professional law enforcement. These procedural advances, while less dramatic than conspiracy theories, represent the case's most enduring impact on American justice.

The Ongoing Debate and Future Research

The Historical Context of the Debate

Technological ethics debates follow a recognizable pattern throughout history. From Galileo's telescope to nuclear fission, each major advancement has provoked similar cycles of enthusiasm and concern. What makes the current moment unique is the democratization of powerful technologies - tools once available only to governments now sit in consumers' pockets.

The Industrial Revolution offers particularly relevant parallels. Then as now, society struggled to adapt legal frameworks and social norms to rapid technological change. The resolution of those earlier debates suggests that balanced approaches - neither Luddite rejection nor unregulated adoption - ultimately prove most sustainable.

Potential Benefits and Applications

In healthcare, AI-assisted diagnostics are already showing remarkable accuracy in early trials. Radiologists using these tools detect certain cancers 15-20% more often than with traditional methods alone. Perhaps more importantly, these systems can reduce diagnostic variability, helping to make care quality more consistent across geographic and economic divides.

Environmental applications show similar promise. Machine learning models that optimize energy grids have reduced waste by up to 30% in pilot programs. Scaled up, such efficiencies could significantly reduce carbon emissions while keeping power delivery reliable, a crucial balance as renewable sources expand.

Ethical Considerations and Challenges

The algorithmic bias problem has moved from theoretical concern to documented reality. Multiple studies have shown how training data imperfections can perpetuate or even amplify societal inequalities. Leading researchers now advocate for algorithmic auditing frameworks similar to financial controls, with independent verification of system fairness.
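
One check such an audit might include is a comparison of favorable-outcome rates across demographic groups. The Python below is a minimal sketch of that idea, assuming binary predictions (1 = favorable outcome) and one group label per record; the function names are hypothetical. It reports the demographic parity gap (highest minus lowest group selection rate) and the disparate impact ratio (lowest rate divided by highest), flagging ratios below 0.8 in the spirit of the US "four-fifths" guideline used in employment-discrimination practice. A real audit would combine several fairness metrics, since they can conflict with one another.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the fraction of favorable (1) predictions for each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += int(pred)
    return {g: favorable[g] / totals[g] for g in totals}

def fairness_audit(predictions, groups, min_ratio=0.8):
    """Summarize group selection rates, parity gap, and disparate impact ratio."""
    rates = selection_rates(predictions, groups)
    if not rates:
        raise ValueError("no records to audit")
    highest = max(rates.values())
    lowest = min(rates.values())
    # If no group receives any favorable outcome, the rates are trivially equal.
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_gap": highest - lowest,
        "impact_ratio": ratio,
        "flagged": ratio < min_ratio,  # below the (assumed) four-fifths threshold
    }
```

For predictions `[1, 1, 1, 0, 1, 0, 0, 0]` with the first four records in group `"a"` and the rest in group `"b"`, the selection rates are 0.75 and 0.25, so the impact ratio is about 0.33 and the result is flagged. An independent auditor could run the same function on a system's logged decisions without access to its internals, which is what makes the financial-audit analogy apt.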

Privacy concerns have similarly evolved from abstract worries into concrete regulatory challenges. The EU's General Data Protection Regulation (GDPR) was only the first wave of legal frameworks that attempt to balance innovation with individual rights. Ongoing debates focus particularly on biometric data and the ethics of predictive modeling in law enforcement.

The Role of Public Discourse and Engagement

Effective technology governance requires moving beyond polarized positions. Successful models, like the multi-stakeholder approach to internet governance, show how inclusive processes can yield practical solutions. Citizen assemblies on AI ethics in several countries have demonstrated that informed public deliberation can produce nuanced policy recommendations.

Science communication plays an equally vital role. When researchers engage actively with journalists and educators, public understanding tends to improve markedly. Several universities now offer training in communicating science to policymakers, recognizing that effective translation between technical and political spheres benefits all stakeholders.

The Interdisciplinary Nature of the Research

The most impactful work happens at disciplinary intersections. Bioethicists collaborating with machine learning engineers, for instance, have developed novel approaches to overseeing medical AI. Such hybrid teams often produce more robust solutions than either discipline could achieve alone.

Humanities scholars bring particularly valuable perspectives, drawing on centuries of philosophical thought about technology's role in society. Their contributions help frame questions that purely technical approaches might overlook, ensuring more holistic development frameworks.

Regulatory Frameworks and Policy Responses

Forward-looking regulations are proving more effective than reactive measures. The precautionary principle approach, while well-intentioned, often stifles innovation without meaningfully reducing risk. More promising are adaptive regulatory sandboxes that allow controlled testing of new technologies while maintaining consumer protections.

International coordination remains challenging but essential. Divergent national approaches create compliance burdens while allowing regulatory arbitrage that undermines safety standards. The growing consensus around baseline AI principles suggests progress toward more harmonized frameworks.

Future Research Directions and Priorities

Three emerging priorities dominate research agendas: explainability (making AI decisions interpretable), robustness (ensuring reliable performance in novel situations), and alignment (matching system goals with human values). Breakthroughs in any of these areas would significantly advance responsible deployment while addressing current public concerns.

Longitudinal impact studies represent another critical need. Much current research focuses on immediate effects, while the most significant consequences may emerge over decades. Establishing baseline metrics now will enable more meaningful assessment of technologies' societal impacts as they mature.